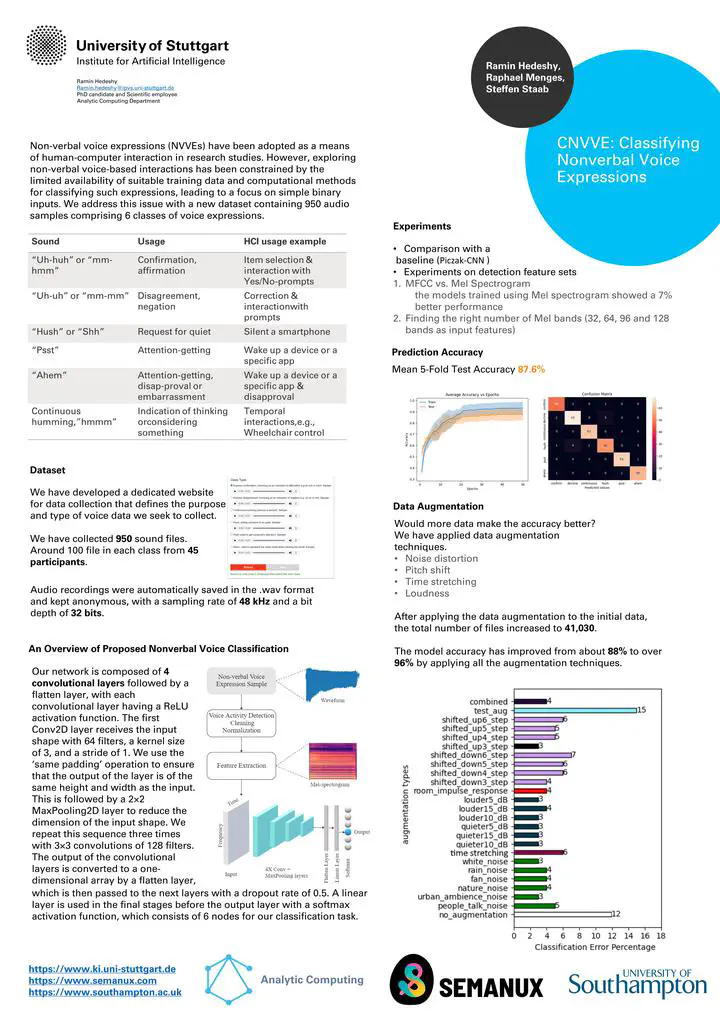

CNVVE: Dataset and Benchmark for Classifying Non-verbal Voice Expressions

Abstract

Non-verbal voice expressions (NVVEs) have been adopted as a means of human-computer interaction in research studies. However, the exploration of non-verbal voice-based interactions has been constrained by the limited availability of suitable training data and of computational methods for classifying such expressions, which has led to a focus on simple binary inputs. We address this issue with CNVVE, a new dataset containing 950 audio samples spanning 6 classes of voice expressions. The data were collected from 42 speakers who donated voice recordings. We trained a classifier on these data using features derived from mel-spectrograms. Furthermore, we studied the effectiveness of data augmentation and significantly improved on the baseline model's accuracy, reaching a test accuracy of 96.6% in 5-fold cross-validation. We have made CNVVE publicly accessible in the hope that it will serve as a benchmark for future research.
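
To make the evaluation protocol concrete, the following is a minimal sketch of how a 5-fold cross-validated accuracy can be computed. It is an illustration under stated assumptions, not the procedure used for CNVVE: the abstract does not say whether folds were stratified by class or grouped by speaker, so the stratification here is assumed, and `stratified_k_fold`, `mean_accuracy`, `evaluate_fold`, and the synthetic `labels` are hypothetical names and data introduced only for this example.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs with each class spread evenly over k folds.

    Assumption: stratifying by class label; the source does not specify this.
    """
    rng = random.Random(seed)

    # Group sample indices by their class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Shuffle within each class, then deal indices round-robin into the folds.
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)

    # Each fold serves as the test set once; the remaining folds form the training set.
    for i in range(k):
        test_idx = sorted(folds[i])
        train_idx = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train_idx, test_idx


def mean_accuracy(labels, evaluate_fold, k=5, seed=0):
    """Average the per-fold test accuracy returned by a user-supplied callback.

    `evaluate_fold(train_idx, test_idx)` is a hypothetical hook that trains a
    model on the training indices and returns its accuracy on the test indices.
    """
    scores = [evaluate_fold(train, test)
              for train, test in stratified_k_fold(labels, k, seed)]
    return sum(scores) / len(scores)


# Synthetic stand-in labels: 950 samples over 6 classes (class sizes are assumed).
labels = [i % 6 for i in range(950)]
splits = list(stratified_k_fold(labels, k=5))
```

Because the recordings come from a limited set of speakers, a speaker-grouped split (no speaker appearing in both training and test folds) would be a stricter alternative to the class-stratified split sketched here.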